Brainstorming with AI: early experiments

Peter Hartree

Epistemic status: Rough notes. I've spent some hours experimenting with this, but I feel I've barely begun.

Below are some ways to brainstorm with AI...

The minimalist method

- Open your default model.

- Say something like: “I’m thinking about X, because Y. Please brainstorm on Z.”

- (optional) Add context, e.g. relevant Google Docs.

- (recommended) Send the same prompt to several other models.
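The steps above can be sketched in a few lines of Python. This is a minimal sketch, not a real client: `build_prompt`, `fan_out`, and the `AskFn` type are hypothetical names, and each model is assumed to be wrapped in a plain function that takes a prompt and returns text. You would supply those wrappers yourself, using whichever provider SDKs you prefer.

```python
from typing import Callable, Dict

# Hypothetical signature: takes a prompt, returns the model's reply as text.
AskFn = Callable[[str], str]


def build_prompt(topic: str, reason: str, task: str, context: str = "") -> str:
    """Fill the "I'm thinking about X, because Y. Please brainstorm on Z." template."""
    prompt = f"I'm thinking about {topic}, because {reason}. Please brainstorm on {task}."
    if context:
        # Optional extra context, e.g. text pasted from a relevant document.
        prompt += f"\n\nContext:\n{context}"
    return prompt


def fan_out(prompt: str, models: Dict[str, AskFn]) -> Dict[str, str]:
    """Send the same prompt to every model and collect replies by model name."""
    return {name: ask(prompt) for name, ask in models.items()}
```

Keeping the replies keyed by model name makes it easy to compare them side by side afterwards.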

Structured prompting with an interview step

Let's say you want to do a MECE (mutually exclusive, collectively exhaustive) brainstorm. You might try a prompt like this:

You'll probably want to do the interview step with a "standard" model, and then run the actual brainstorm with a "heavy" model.
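One way to wire up the two-model split is sketched below. Everything here is an assumption made for illustration: the function name, the prompt wording, and the idea that each model is a plain prompt-in, text-out function. The wording is not the prompt referred to above.

```python
from typing import Callable, List

# Hypothetical signature: takes a prompt, returns the model's reply as text.
AskFn = Callable[[str], str]


def interview_then_brainstorm(
    goal: str,
    standard_model: AskFn,
    heavy_model: AskFn,
    answer_question: Callable[[str], str],
    max_questions: int = 3,
) -> str:
    # Step 1: the cheaper model asks clarifying questions, one per line.
    raw = standard_model(
        f"I want to run a MECE brainstorm on: {goal}\n"
        f"Before brainstorming, ask me up to {max_questions} "
        "clarifying questions, one per line."
    )
    questions: List[str] = [q.strip() for q in raw.splitlines() if q.strip()]
    questions = questions[:max_questions]

    # Step 2: collect the human's answers into a short brief.
    brief = "\n".join(f"Q: {q}\nA: {answer_question(q)}" for q in questions)

    # Step 3: the heavier model runs the actual brainstorm using the brief.
    return heavy_model(
        f"Goal: {goal}\n\nInterview notes:\n{brief}\n\n"
        "Produce a MECE (mutually exclusive, collectively exhaustive) brainstorm."
    )
```

In interactive use, `answer_question` could simply be `input`, so that you answer each question at the terminal before the expensive call is made.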

Meta-prompting

The flow is:

- Brainstorm useful brainstorming techniques. Tell the AI what you're thinking about, and ask it to brainstorm the kinds of brainstorming techniques that might be most useful.

- Write a meta prompt. Ask the AI to write a meta-prompt that'll generate brainstorming prompts for the kinds of brainstorms you want to run (see Appendix 1 for an example).

- Run the brainstorm prompt. Try several models.
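The flow above can be sketched as a short chain of calls. This is a rough sketch under assumptions: `meta_prompt_flow`, `planner`, and `runners` are hypothetical names, the prompt wording is illustrative, and each model is assumed to be a plain function from prompt to text.

```python
from typing import Callable, Dict

# Hypothetical signature: takes a prompt, returns the model's reply as text.
AskFn = Callable[[str], str]


def meta_prompt_flow(
    topic: str, planner: AskFn, runners: Dict[str, AskFn]
) -> Dict[str, str]:
    # 1. Brainstorm useful brainstorming techniques for this topic.
    techniques = planner(
        f"I'm thinking about: {topic}\n"
        "Which kinds of brainstorming techniques would be most useful here?"
    )

    # 2. Ask for a meta-prompt that generates brainstorming prompts.
    meta_prompt = planner(
        "Write a meta-prompt that generates brainstorming prompts "
        f"using these techniques:\n{techniques}"
    )

    # 3. Apply the meta-prompt to the topic to get a concrete brainstorm prompt.
    brainstorm_prompt = planner(f"{meta_prompt}\n\nTopic: {topic}")

    # 4. Run the brainstorm prompt on several models.
    return {name: ask(brainstorm_prompt) for name, ask in runners.items()}
```

In practice you would probably want to read and edit the intermediate outputs by hand rather than chaining them blindly.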

Vibe-code your brainstorm review interface

You could ask Claude to present brainstorm results in a custom UI (chat thread).

I've not figured out anything good yet, but maybe you can! [1]

Multi-stage prompting with OpenAI Agent Builder

Perhaps you'd like to start several separate GPT-5 Pro brainstorms with a single prompt? Try prompt-chaining with OpenAI Agent Builder.
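To make the idea of prompt-chaining concrete, here is a plain-Python stand-in. It does not use Agent Builder or any OpenAI API. `chain_brainstorms`, `splitter`, and `brainstormer` are hypothetical names, and both models are assumed to be simple prompt-in, text-out functions that you supply.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# Hypothetical signature: takes a prompt, returns the model's reply as text.
AskFn = Callable[[str], str]


def chain_brainstorms(
    seed_prompt: str,
    splitter: AskFn,
    brainstormer: AskFn,
    max_branches: int = 4,
) -> List[str]:
    # Stage 1: one call turns the seed prompt into several sub-prompts.
    raw = splitter(
        f"Split this into up to {max_branches} separate brainstorm prompts, "
        f"one per line:\n{seed_prompt}"
    )
    sub_prompts = [line.strip() for line in raw.splitlines() if line.strip()]
    sub_prompts = sub_prompts[:max_branches]
    if not sub_prompts:
        return []

    # Stage 2: run each sub-prompt as its own brainstorm, in parallel.
    # pool.map returns results in the same order as sub_prompts.
    with ThreadPoolExecutor(max_workers=len(sub_prompts)) as pool:
        return list(pool.map(brainstormer, sub_prompts))
```

Threads are a reasonable fit here because each call mostly waits on a network response, and slow, heavy models benefit most from running side by side.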

There is so much to figure out here

Some challenges:

- The consumer chat app interfaces are very bad for ambitious brainstorming. We need custom interfaces and model orchestration.

- There are many different use cases for brainstorming.

- Evaluating and iterating on prompts and models is difficult, because the outputs are long and difficult to grade.

- You need to filter for the good stuff, and present it in a way that's easy to engage with.

Please share your ideas and experiences.

Appendix 1. Example meta prompt

The research topic I chose:

Example outputs (Claude Opus 4.1):

Appendix 2. A custom interface for brainstorming critiques

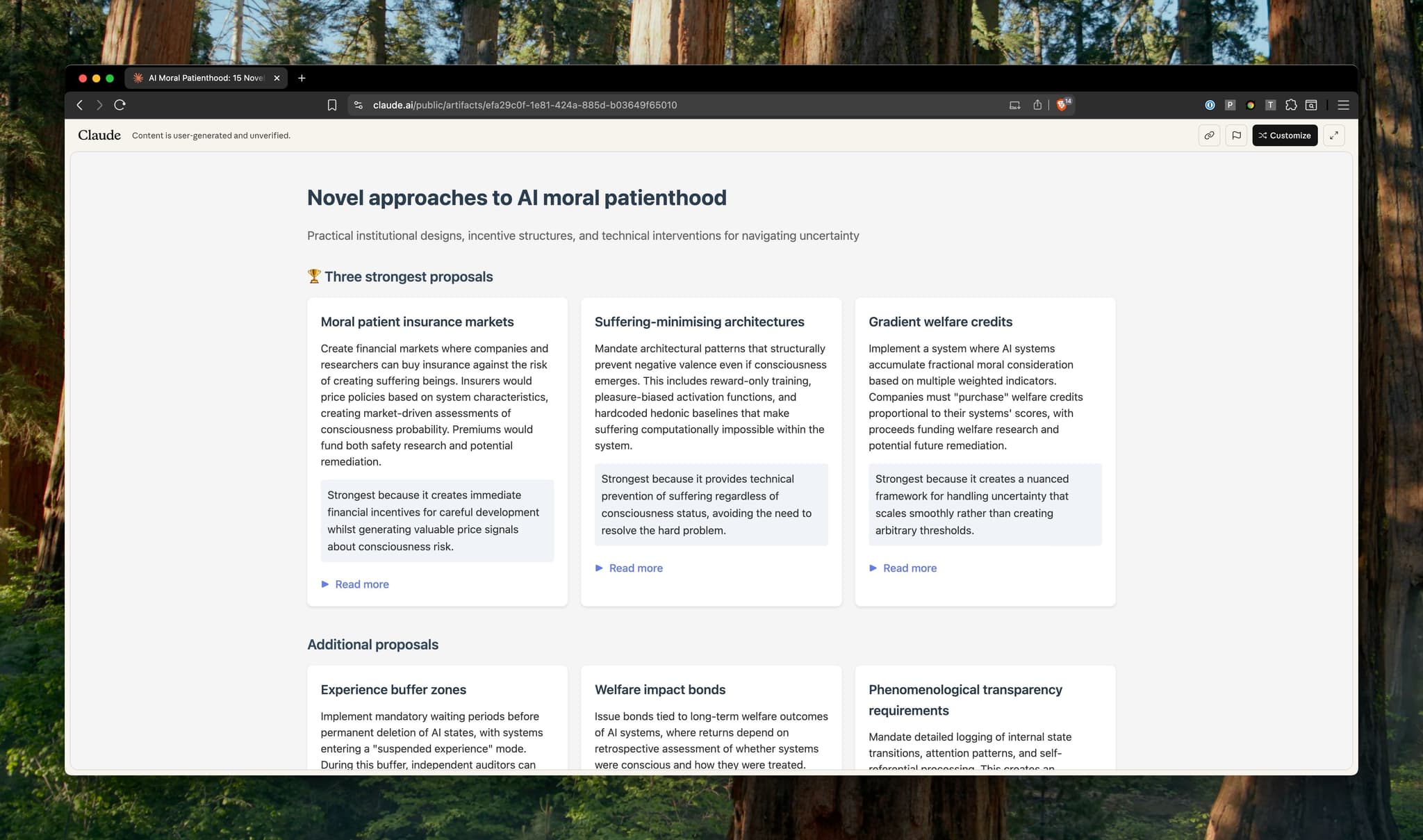

Here's a prototype I made for Forethought Research recently:

Footnotes

[1] You could also build an interface for running prompts (cf. prototyping with Claude artifacts). It's reasonable to prototype a prompting UI like this, but note that if you actually run brainstorm prompts via Claude artifacts, you can't use the strongest AI models. So you'd want to take the prototype and build a proper app with Replit, Cursor, Codex, or whatever.