How to support AI adoption on your team

Peter Hartree


Epistemic status: early notes. This post will get a major update in December.

I think many teams should take AI adoption roughly as seriously as hiring. Reasons:

For many teams, 50-500 hours of investment in AI adoption will yield returns comparable to adding several junior staff. In some cases, an even higher ROI is possible.[1]

People who rarely use LLMs can boost their individual output by 5-50% with 5-50 hours of focussed learning. This applies to staff at all levels, including your C-suite and your expert researchers.

If you're about to ask a generalist employee to spend weeks on a hiring round, consider asking them to push on internal AI adoption instead.
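To make the ranges above concrete, here is a rough payback sketch. It assumes a full-time year of about 1,800 working hours and treats an output boost of x% as x% of those hours gained; both are simplifying assumptions of mine, not figures from any study, and the function names are purely illustrative.

```python
# Back-of-the-envelope payback estimate for time spent learning AI tools.
# ANNUAL_WORK_HOURS is an assumption for illustration, not a figure from the post.
ANNUAL_WORK_HOURS = 1800


def hours_gained_per_year(output_boost: float,
                          annual_hours: float = ANNUAL_WORK_HOURS) -> float:
    """Extra output per year in hours, treating a boost of x as x * annual hours."""
    return output_boost * annual_hours


def payback_weeks(learning_hours: float, output_boost: float,
                  annual_hours: float = ANNUAL_WORK_HOURS) -> float:
    """Weeks until the learning time is repaid, spreading the gain over 52 weeks."""
    weekly_gain = hours_gained_per_year(output_boost, annual_hours) / 52
    return learning_hours / weekly_gain


# Pessimistic corner of the ranges in the text: 50 hours of learning, 5% boost.
print(round(hours_gained_per_year(0.05)))   # 90
print(round(payback_weeks(50, 0.05), 1))    # 28.9
```

Even in that pessimistic corner, the learning time pays for itself in well under a year under these assumptions. Swap in your own estimates before drawing conclusions.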

How to do that? Some ideas below...

1. Assign explicit responsibilities

- Give a senior staff member responsibility for driving AI adoption throughout the organisation.

  - Consider asking them (or someone they manage) to work on this full-time.

  - They should give weekly updates to the CEO.

- For every staff member:

  - Add “AI adoption” to performance review and peer feedback cycles.

  - Ask them to adopt the 5x rule, and request weekly updates on the recurring tasks they've considered for automation or augmentation.

- ... what else? Please send me your ideas.

- Further reading: Zapier adoption rubric; Shopify memo.

2. Make time

- People often say they have "no time" to experiment with AI. This is one of the strongest headwinds to adoption.

- Solution: weekly coworking sessions and quarterly hackathons for AI experiments and learning. Make them mandatory.

- Further reading: How Zapier rolled out AI.

3. Make a "#using-ai" Slack channel

- Make a Slack channel for sharing learnings, tips, links, products, questions, etc.

- Frame it so the bar for posting is low.

- Think: "thing that worked well", "nice blog post", "cool products", "any advice on X?".

- Use a separate channel to discuss formal initiatives e.g. a project to automate a workflow.

4. Encourage internal champions

- You may already have some natural "AI adoption champions" in your team.

- Encourage them to explicitly budget time for experimentation and supporting others.

- Add "support AI adoption" to their role description.

- Consider making this their full-time role.

- Add "AI show & tell" to your team meeting agendas.

- Further reading: OpenAI Academy; GitHub Resources.

5. Make it easy

- All staff should have:

  - Access to the latest models on ChatGPT, Claude and Gemini.

  - No need to think about usage limits.

  - A $500+ monthly budget for trying new tools, courses and subscriptions.

  - Someone to contact if they have questions.

- Create affordances for common use cases (e.g. shared projects, Claude Skills, prompt libraries, custom software tools).

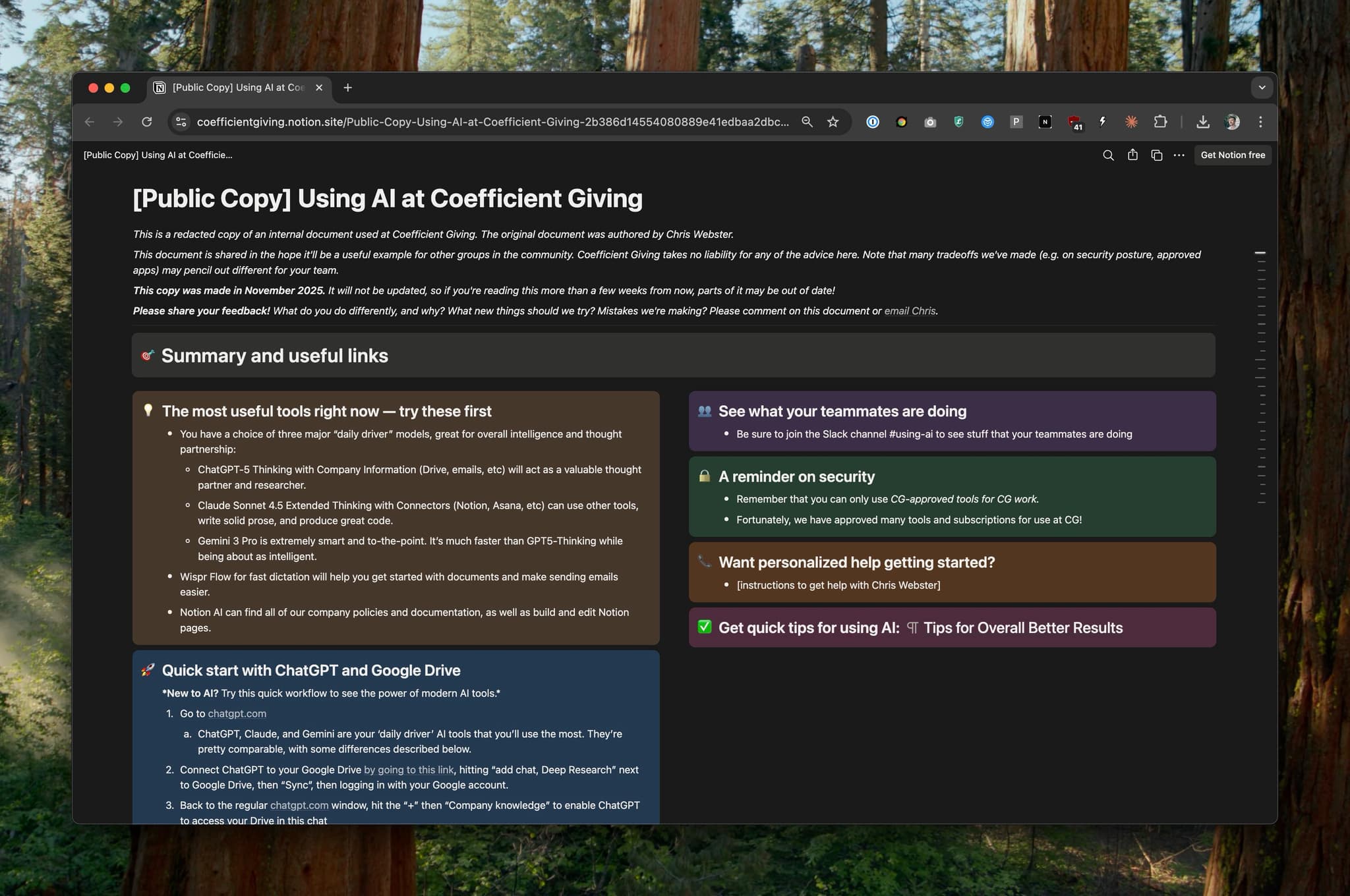

- Make your AI usage policies clear, simple and permissive (see example).

- Have a strong presumption in favour of enabling all staff to use workflow automation tools like Zapier and Make. Provide training if necessary.

- Evaluate new tools within 2 days of staff requests.

- Your risk assessments should include quantitative BOTECs (back-of-the-envelope calculations).[2]

Your team should have clear guidelines on AI usage.
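As a rough illustration of a quantitative BOTEC for a tool risk assessment, the sketch below compares the expected annual benefit of a tool with the expected annual cost of a feared incident. The `ToolBotec` class, its field names, the 46 working weeks, and every number in the example are hypothetical placeholders of mine; a real assessment would substitute your own estimates.

```python
from dataclasses import dataclass


@dataclass
class ToolBotec:
    """Placeholder inputs that a team would estimate for itself."""
    staff_count: int
    hours_saved_per_person_per_week: float
    hourly_cost: float                    # fully loaded cost of one staff hour
    incident_probability_per_year: float  # chance the feared incident occurs
    incident_cost: float                  # estimated cost if it does occur

    def expected_annual_benefit(self, working_weeks: int = 46) -> float:
        """Value of staff time saved per year (working_weeks is an assumption)."""
        return (self.staff_count * self.hours_saved_per_person_per_week
                * working_weeks * self.hourly_cost)

    def expected_annual_risk_cost(self) -> float:
        """Probability-weighted cost of the incident per year."""
        return self.incident_probability_per_year * self.incident_cost

    def net_expected_value(self) -> float:
        return self.expected_annual_benefit() - self.expected_annual_risk_cost()


# Made-up numbers, purely to show the shape of the calculation.
example = ToolBotec(
    staff_count=20,
    hours_saved_per_person_per_week=1.0,
    hourly_cost=50.0,
    incident_probability_per_year=0.01,
    incident_cost=100_000.0,
)
print(example.expected_annual_benefit())    # 46000.0
print(example.expected_annual_risk_cost())  # 1000.0
print(example.net_expected_value())         # 45000.0
```

The point is less the exact numbers than the comparison: writing both sides down in the same units makes it harder to overweight a vivid but unlikely risk.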

Appendix 1. Further reading

- Claude report ⭐

- Azeem Azhar: Seven lessons from building with AI ⭐

- Lenny's newsletter

- Twitter: Aaron Levie, Lenny Rachitsky

- Podcasts: Aaron Levie; more soon.

- OpenAI Academy

- Ethan Mollick

Appendix 2. Ambitious internal tooling

Some teams invest heavily in internal tooling. For example, Claude reports:

Stripe built an internal web application called LLM Explorer with a ChatGPT-like interface, rolled it out company-wide with proper security controls, and watched over 33% of employees adopt it within days. The tool now powers over 60 internal LLM applications across the company, with hundreds of reusable interaction patterns documented and shared.

The most popular feature is the prompt sharing and discovery system. Employees create and share prompts like the "Stripe Style Guide," which transforms any text to match company tone for emails, website copy, and presentations. This single prompt is used by account executives, marketers, and speakers across functions.

Footnotes

1. You do this by augmenting your existing staff and fully automating some workflows. I hope to share case studies from within the AI safety and EA communities soon; in the meantime, see here for ten credible stories. ↩

2. Without them, you'll probably be too risk-averse. I recently spoke with two orgs that had both independently overreacted to the OpenAI NYT lawsuit, implementing a costly policy based on scope-insensitive qualitative reasoning. When prompted to BOTEC, they recognised the mistake as unambiguous. ↩